Investing in the age of AI

What you need to know and which companies will benefit the most

Intro

Over the last few weeks I have spent a lot of time thinking about AI and its implications for both the economy and individual companies. While learning more about AI, I came across this excellent article by Benjamin Todd, which you will find here: https://80000hours.org/agi/guide/when-will-agi-arrive/

He closes the article with the following words:

Today’s situation feels like February 2020 just before COVID lockdowns: a clear trend suggested imminent, massive change, yet most people continued their lives as normal.

I believe we have to take both the good and the bad implications of AI very seriously, and my goal is for you to know more about the current state of AI by the time you finish reading. The second part of this article covers which companies will benefit from the rise of AI and which will face a tough time.

Takeaways from Benjamin Todd’s article

I summarise Benjamin's article in this section. If you want to jump directly to the next section, where I talk about companies, go ahead.

The following paragraphs are mostly direct quotes from his article. I believe the article is too good to paraphrase, so I quote him instead. The term AGI, short for Artificial General Intelligence, describes AI with human-level intelligence or beyond.

Where we draw the ‘AGI’ line is ultimately arbitrary. What matters is these models could start to accelerate AI research itself, unlocking vastly greater numbers of more capable ‘AI workers’. In turn, sufficient automation could trigger explosive growth and 100 years of scientific progress in 10 — a transition society isn’t prepared for.

Four key factors are driving AI progress: larger base models, teaching models to reason, increasing models’ thinking time, and building agent scaffolding for multi-step tasks. These are underpinned by increasing computational power to run and train AI systems, as well as increasing human capital going into algorithmic research.

Benjamin makes the interesting point that the growth of AI can be limited by a shortage of AI researchers. It is a take I have rarely heard until now.

Increasing AI performance requires exponential growth in investment and the research workforce. At current rates, we will likely start to reach bottlenecks around 2030. Simplifying a bit, that means we’ll likely either reach AGI by around 2030 or see progress slow significantly. Hybrid scenarios are also possible, but the next five years seem especially crucial.

The computing power applied to AI has been growing faster than the famous Moore’s Law, which states that the number of transistors in an integrated circuit doubles roughly every two years. This concept has proven to be very much alive over all those years:

The computing power of the best chips has grown about 35% per year since the beginnings of the industry, known as Moore’s Law. However, the computing power applied to AI has been growing far faster, at over 4-times per year.
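To make the gap between the two growth rates in the quote concrete, here is a minimal sketch that compounds both over a few years. The function name is my own, and 4.0 is used as a lower bound for "over 4-times per year"; treat it as back-of-the-envelope arithmetic, not a forecast.

```python
def growth_factor(rate_per_year: float, years: int) -> float:
    """Total multiplier after compounding `rate_per_year` for `years` years."""
    return rate_per_year ** years


MOORE_RATE = 1.35  # about 35% per year, as stated in the quote
AI_RATE = 4.0      # "over 4-times per year"; 4.0 is a lower-bound assumption

for years in (1, 3, 5):
    chips = growth_factor(MOORE_RATE, years)
    ai_compute = growth_factor(AI_RATE, years)
    print(f"{years} yr: best chips x{chips:.1f}, compute applied to AI x{ai_compute:.0f}")
```

Over five years, 35% per year compounds to roughly 4.5x, while 4x per year compounds to about 1,000x. That difference is why AI compute feels like a different regime than ordinary chip progress.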

Part of the reason that computing for AI is growing faster than Moore’s Law is the fantastic progress in the field of GPUs, led by Nvidia and increasingly by AMD. Learn more about the investment case of AMD here:

At the same time, training AI models is getting increasingly expensive, and only a handful of companies in the world can afford spending on this scale. You will find my take on some of these companies here:

The CEO of Anthropic, Dario Amodei, projects GPT-6-sized models will cost about $10bn to train. That’s still affordable for companies like Google, Microsoft, or Meta, which earn $50–100bn in profits annually.

The progress that has been made in the field of AI and LLMs is incredible. I urge you to try some of the models and ask them more complicated questions. I recently used ChatGPT to get renderings of a bathroom renovation I was planning, and I was surprised by how well the generated images turned out.

Most people aren’t answering PhD-level science questions in their daily life, so they simply haven’t noticed recent progress. They still think of LLMs as basic chatbots. But o1 was just the start. At the beginning of a new paradigm, it’s possible to get gains especially quickly.

AI can increasingly think for longer periods of time. In the past, LLMs were very restricted in this respect. If I ask you a complicated question and give you 5 minutes, 10 hours, or one month to answer it, I will get very different answers from you. The same applies to AI:

If you could think for a month, you’ll make a lot more progress — even though your raw intelligence isn’t higher. At current rates, we’ll soon have models that can think for a month — and then a year.

New ways of training AI are also being developed. One of these is called distillation:

AI researchers may be able to use this technique to create another flywheel for AI research. It’s a process called iterated distillation and amplification, which you can read about here. Here’s roughly how it would work:

Have your model think for longer to get better answers (‘amplification’).

Use those answers to train a new model. That model can now produce almost the same answers immediately without needing to think for longer (‘distillation’).

Now have the new model think for longer. It’ll be able to generate even better answers than the original.

Repeat.

This process is essentially how DeepMind made AlphaZero superhuman at Go within a couple of days, without any human data.
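The loop quoted above can be illustrated with a deliberately tiny toy. This is only a loose analogy, not how any lab trains models: the "model" here is a hypothetical lookup table of instant answers to "what is the square root of x?", "thinking for longer" is a couple of extra Newton refinement steps, and "distillation" simply stores the refined answers as the new instant answers. All names are my own.

```python
import math


def amplify(guess: float, target: float, steps: int) -> float:
    """'Think for longer': refine a guess for sqrt(target) with extra Newton steps."""
    for _ in range(steps):
        guess = 0.5 * (guess + target / guess)
    return guess


def distill(model: dict, steps: int) -> dict:
    """Build a new model whose instant answers are the old model's amplified answers."""
    return {x: amplify(guess, x, steps) for x, guess in model.items()}


def max_error(model: dict) -> float:
    """Worst-case distance between the model's instant answer and the true root."""
    return max(abs(guess - math.sqrt(x)) for x, guess in model.items())


# Hypothetical starting model: a crude instant answer (x itself) for each question.
questions = [2.0, 10.0, 50.0]
model = {x: x for x in questions}

errors = [max_error(model)]
for _ in range(4):  # amplify, distill, repeat
    model = distill(model, steps=2)
    errors.append(max_error(model))

for round_number, error in enumerate(errors):
    print(f"round {round_number}: worst error {error:.2e}")
```

Each round starts from better instant answers than the last, so the same small amount of extra "thinking" gets further every time. That compounding is the flywheel the quote describes.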

Beyond chatbots, agents are a new and important concept. An agent comes closer to what humans are capable of, since it does more than just finish a single task.

But the AI companies are now turning chatbots into agents.

An AI ‘agent’ is capable of doing a long chain of tasks in pursuit of a goal.

For example, if you want to build an app, rather than asking the model for help with each step, you simply say, “Build an app that does X.” It then asks clarifying questions, builds a prototype, tests and fixes bugs, and delivers a finished product — much like a human software engineer.

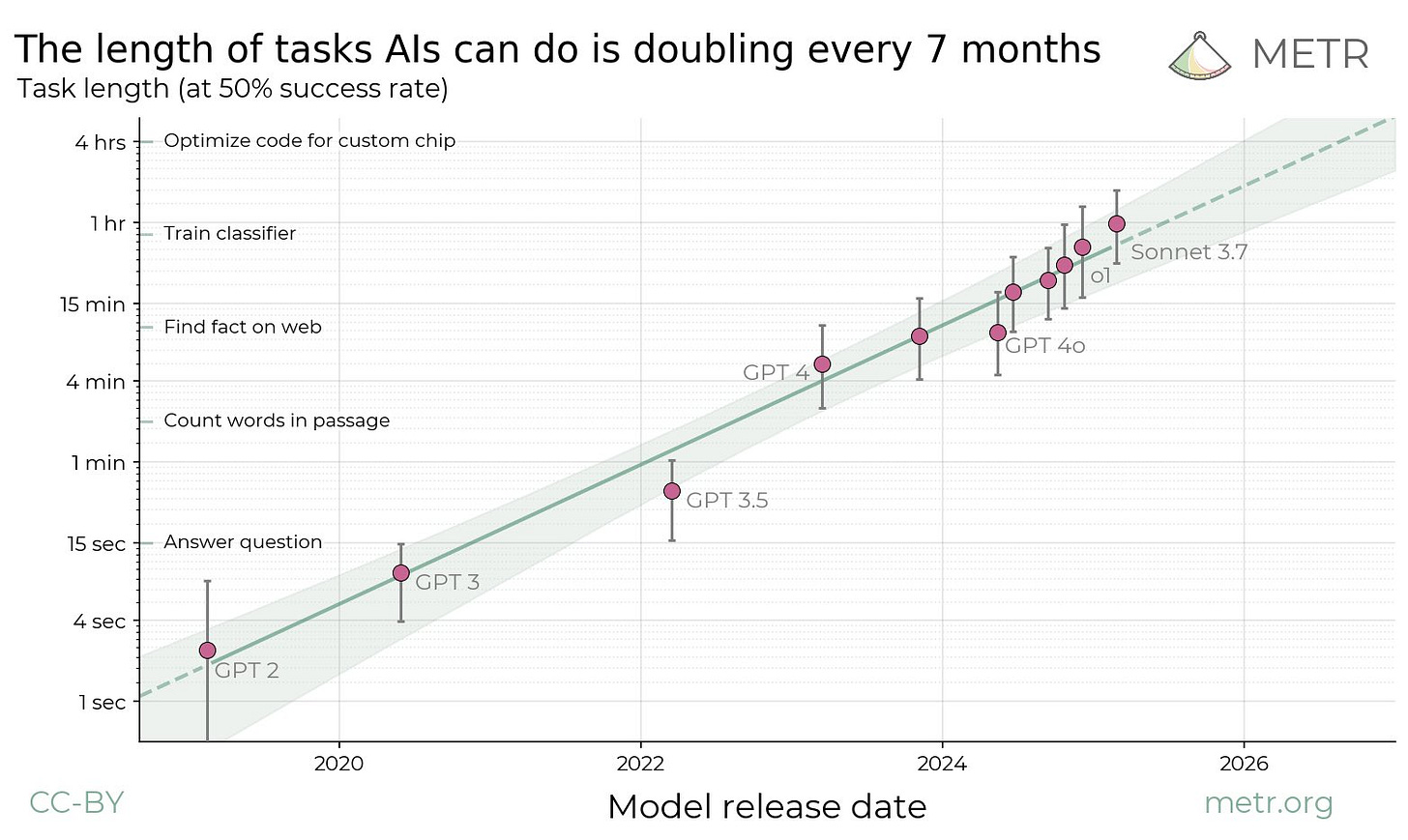

The length of tasks that AI can do is growing exponentially. OpenAI’s o3 model (I have no idea how they came up with these names) can already do tasks that are 2.3x as long as those the previous o1 model could handle.

These graphs above explain why, although AI models can be very ‘intelligent’ at answering questions, they haven’t yet automated many jobs.

Most jobs aren’t just lists of discrete one hour tasks –– they involve figuring out what to do; coordinating with a team; long, novel projects with a lot of context, etc.

Even in one of AI’s strongest areas — software engineering –– today it can only do tasks that take under an hour. And it’s still often tripped up by things like finding the right button on a website. This means it’s a long way from being able to fully replace software engineers.

However, the trends suggest there’s a good chance that soon changes. An AI that can do 1-day or 1-week tasks would be able to automate dramatically more work than current models. Companies could start to hire hundreds of ‘digital workers’ overseen by a small number of humans.
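To get a feeling for how quickly "under an hour" could turn into 1-day or 1-week tasks under exponential growth, here is a rough sketch. The doubling time of seven months is an illustrative assumption of mine and not a figure from this article, as is counting a day as 8 and a week as 40 working hours.

```python
import math


def months_until(target_hours: float, current_hours: float, doubling_months: float) -> float:
    """Months needed for the task horizon to grow from current_hours to target_hours."""
    doublings = math.log2(target_hours / current_hours)
    return doublings * doubling_months


CURRENT_HOURS = 1.0    # "under an hour" today, per the quote; 1.0 is an upper bound
DOUBLING_MONTHS = 7.0  # assumption for illustration only

targets = (
    ("1-day task (8 working hours)", 8.0),
    ("1-week task (40 working hours)", 40.0),
)
for label, hours in targets:
    months = months_until(hours, CURRENT_HOURS, DOUBLING_MONTHS)
    print(f"{label}: about {months:.0f} months")
```

Under these assumptions, a 1-day task is three doublings away (about 21 months) and a 1-week task a little over five doublings (about 37 months). Change `DOUBLING_MONTHS` to see how sensitive the timeline is to that single parameter.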

Based on all these breadcrumbs, Benjamin expects the following. To be honest, these projections are both scary and exciting.

This implies that in two years we should expect AI systems that:

Have expert-level knowledge of every field

Can answer math and science questions as well as many professional researchers

Are better than humans at coding

Have general reasoning skills better than almost all humans

Can autonomously complete many day long tasks on a computer

And are still rapidly improving

The next leap might take us into beyond-human-level problem solving — the ability to answer as-yet-unsolved scientific questions independently.

Obviously some challenges need to be conquered for us to reach this state:

Many bottlenecks hinder real-world AI agent deployment, even for those that can use computers. These include regulation, reluctance to let AIs make decisions, insufficient reliability, institutional inertia, and lack of physical presence.

Initially, powerful systems will also be expensive, and their deployment will be limited by available compute, so they will be directed only at the most valuable tasks.

This means most of the economy will probably continue pretty much as normal for a while. You’ll still consult human doctors (even if they use AI tools), get coffee from human baristas, and hire human plumbers.

However, there are a few crucial areas where, despite these bottlenecks, these systems could be rapidly deployed with significant consequences.

Finally, the case against tremendous AI progress by 2030:

First, concede that AI will likely become superhuman at clearly defined, discrete tasks, which means we’ll see continued rapid progress on benchmarks. But argue it’ll remain poor at ill-defined, high-context, and long-time-horizon tasks. That’s because these kinds of tasks don’t have clearly and quickly verifiable answers, and so they can’t be trained with reinforcement learning, and they’re not contained in the training data either. Second, argue that most knowledge jobs consist significantly of these long-horizon, messy, high-context tasks.

So what is the most likely outcome in the next years?

Right now, the total compute available for training and running AI is growing 3x per year, and the workforce is growing rapidly too.

This means that each year, the number of AI models that can be run increases 3x. In addition, three times more compute can be used for training, and that training can use better algorithms, which means they get more capable as well as more numerous.

Earlier, I argued these trends can continue until 2028. But now I’ll show it most likely runs into bottlenecks shortly thereafter.

Google, Microsoft, Meta etc. are spending tens of billions of dollars to build clusters that could train a GPT-6-sized model in 2028.

Another 10x scale up would require hundreds of billions of investment. That’s do-able, but more than their current annual profits and would be similar to another Apollo Program or Manhattan Project in scale.

Power: Current levels of AI chip sales, if sustained, mean that AI chips will use 4%+ of US electricity by 2028, but another 10x scale up would be 40%+. This is possible, but it would require building a lot of power plants.

The paragraph above leaves two scenarios for us:

Either we hit AI that can cause transformative effects by ~2030: AI progress continues or even accelerates, and we probably enter a period of explosive change.

Or progress will slow: AI models will get much better at clearly defined tasks, but won’t be able to do the ill-defined, long horizon work required to unlock a new growth regime. We’ll see a lot of AI automation, but otherwise the world will look more like ‘normal’.

I really enjoyed his article and the great way that Benjamin summarizes and projects the future of AI.

The effect of AI on companies today

There is the good, the bad, and the ugly. Let’s start with the ugly: some business models have already been rendered obsolete by AI, or have at least taken severe damage.

Think of Chegg, an education company that sells tutoring and study materials. Chegg profited big time from Covid and all the stay-at-home policies. In Chegg’s case, however, the storm followed the sunshine immediately and the rise of ChatGPT hurt the company severely. Why pay for a tutor, if you can get one with ChatGPT for free? The chart of stock prices tells you everything you need to know.

Let’s add insult to injury and ask ChatGPT what kind of effect it had on Chegg:

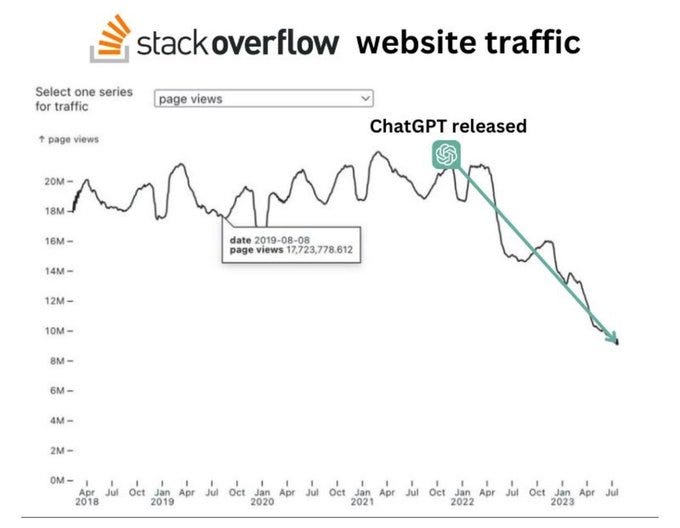

Other companies and websites, such as StackOverflow, can clearly trace their decline to the release of ChatGPT. I wrote about this here as well.

Alphabet’s CEO stated late last year that AI now generates more than 25% of all new code at Google, and Microsoft CEO Satya Nadella stated in April that AI generates up to 30% of the code written at Microsoft. I spoke with programmers, and while they claim that AI is merely finishing code that humans start to write, it is nevertheless a significant change in how work is being done.

The losers of AI

Some companies are clear losers, and Chegg, as I pointed out before, is one of them. When I think about other companies that could lose, these come to mind:

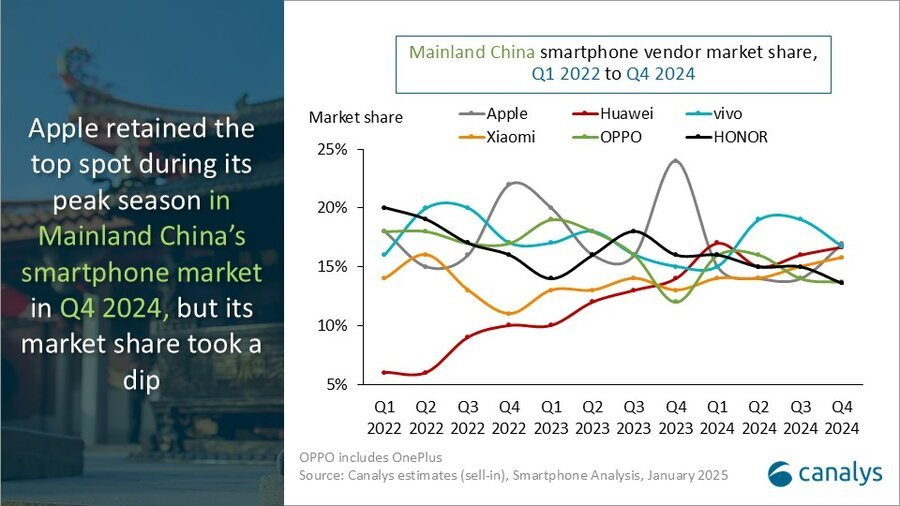

Apple

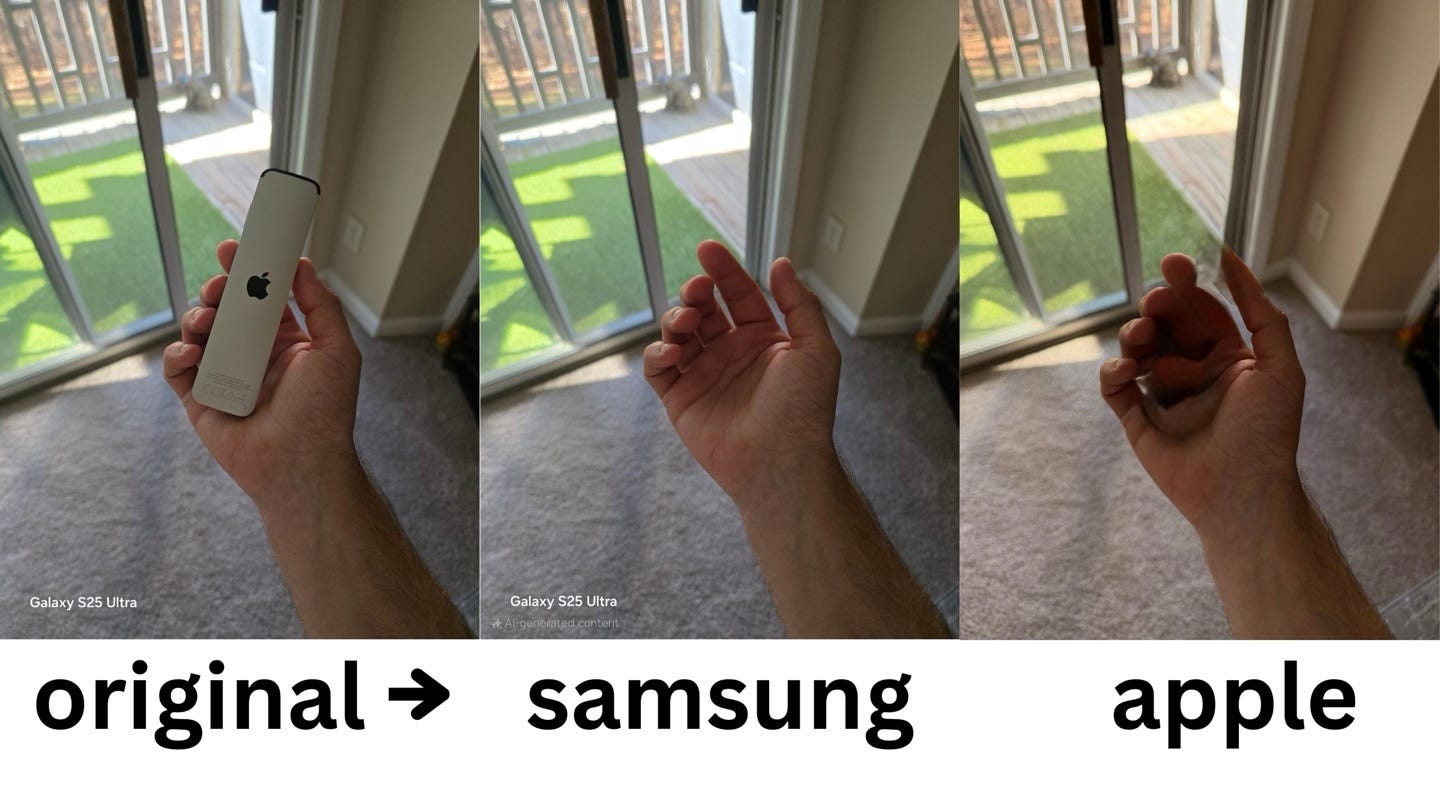

This one might be a surprise since Apple is a tech company. As of today, Apple can’t show any major progress in terms of AI. And I can’t take the Genmoji approach seriously. If all you can show are some birds, you might as well just not show anything.

In terms of AI capabilities, Apple seriously lags behind its competitors. This picture below is just one of many where users compare Apple's AI with that of other companies.

Especially in the Chinese market the AI capabilities of phones (and cars) are a serious decision-maker for purchases. Apart from AI, Apple seems to be a major loser in the whole tariff war. President Trump insists on iPhones being manufactured in the US and if the Chinese public takes a harder stance against US companies, Apple will be on the losing side.

Outsourcing companies such as Fiverr

Fiverr helps individuals and companies to find the right expert for a project. This can be anything from designing a logo to building a whole website. With the help of AI, many potential users of this platform will instead fulfill the task with the help of an AI tool. I toyed around with ChatGPT to create a logo and was surprised by how well it worked. Why then employ an external consultant?

Shallow news

This is mostly about websites that give you a headline and some easy-to-digest content. In the future, these articles will be written by AI and because of that, any person can start such a website. Maybe this is a great new field to venture into.

The unaffected

You can follow Warren Buffett and his advice on investing in topics that are unaffected by change. Replace “internet” with “AI” and the sentence still stands valid today.

Our approach is very much profiting from lack of change rather than from change. With Wrigley chewing gum, it's the lack of change that appeals to me. I don't think it is going to be hurt by the Internet. That's the kind of business I like.

Apart from consumer staples such as Coca-Cola and Snickers, there are more businesses that are mostly unaffected by the rise of AI: everything that is very labor-intensive and can only be done on-site. Think of your local plumber or electrician. As much as I would love to fix things at home with only the help of ChatGPT and the like, I cannot do these things on my own because they require expert knowledge or the right tools, or are just too dangerous for an amateur.

Public companies that come to mind are Home Depot, Lowe's, Floor & Decor, and other hardware retailers. You will still need their tools and materials to renovate your home. Hardware is hard to replace with AI. The same applies to companies with a wide store network such as O'Reilly Automotive (a company whose stock I have followed for many years without ever buying any shares).

Other retailers include grocery stores (think Walmart or Sprouts Farmers Market). Please don’t think about drug stores such as Walgreens, since Walgreens has more issues than you can count. What a sad chart. Soon it will be taken private and you can bet on massive store closures.

The Winners

I am sure that is the part you waited for the whole time. Some clear winners profit from the rise of AI.

Let’s start with the obvious ones and let’s follow the old saying: “During a gold rush, sell shovels”. Just like in the great gold rush in California, the people who made the most money were those who sold shovels and jeans to all those people hoping for the one big hit.

Samuel Brannan was California’s first millionaire and made most of his money by selling tools to prospectors searching for gold. In the case of AI, the companies that most resemble the shovel salesman are the hardware companies that build the very tools needed for both AI training and inference.

AMD and Nvidia

For GPUs, that means Nvidia and AMD. AMD caught my interest because it has been overshadowed by Nvidia in the whole debate about GPU powerhouses, even though it overtook Intel as the main innovator in CPU development. Learn more about AMD here:

The revenue growth of Nvidia is mind-blowing. It is unheard of for a company of Nvidia's size to keep growing that fast. Nvidia’s earnings releases attract a cult-like following, much like some sports events.

Despite the revenue growth both companies have experienced in recent quarters, I believe there is much more to come. AI requires the most cutting-edge technology, and none of the large software players wants to fall behind.

Taiwan Semiconductor and ASML

If we go one step back, the company that manufactures these GPUs is Taiwan Semiconductor, and its most important supplier is ASML. Taiwan Semiconductor dominates chip manufacturing and has left its largest competitors, Samsung and Intel, behind. The company is so important to the whole (Western) world that the US would most likely defend Taiwan for this reason alone. There is even a term for this: the “silicon shield”.

There is exactly one company in the world that can build the most advanced machines for next-gen chip making. This is the Dutch company ASML. You will have a hard time finding a larger moat than the one of ASML. ASML is a fantastic company and one of the very few European companies that are a true force in the field of technology. I wrote about the investment case of ASML here:

Arista

With all those beautiful CPUs and GPUs, we need a company that links them together. Enter Arista, a leading player in data-center networking. Arista was my pitch on my Omaha trip this year and you will find the whole investment case here.

Moving from hardware to software we can follow the paradigm: “data is the new oil”. The following companies might seem very obvious choices but they offer so much more than most investors are aware of.

Alphabet

There is hardly a company in the world that knows more about you than Alphabet. You use Google Search to answer your questions, navigate with Google Maps, watch your favorite videos on YouTube, take a Waymo to get from point A to B, store your data in Google Cloud, collaborate with friends and colleagues in Google Docs, correspond via Gmail, and much more. I guess Alphabet knows you better than some of your friends do.

The true value of this data treasure was recently showcased by the release of the AI video generator Veo 3. The uncountable hours of video material on YouTube certainly helped to train Veo 3. If you haven’t seen any videos generated purely by AI, prepare your mind to be blown away. Make sure to learn more about Veo 3 here: https://deepmind.google/models/veo/

Alphabet is often considered to be on the losing side of the AI race, since search might get disrupted by people using AI instead. So far this has not proven to be the case, and search keeps growing. At the same time, Alphabet's AI, named Gemini, is improving its reputation: formerly known as Bard and off to a very bad start, it has received very positive feedback in recent months. On Polymarket (a betting website), Google has a strong lead over its competitors and has gained traction since April.

Meta

If you want to learn more about Meta and two more winners including my conclusion please subscribe to support my work.

To all existing subscribers: Thank you for your support! :)
