AMD Stock Analysis & Deep Dive

Perfectly positioned to profit from the rise of AI and the world’s hunger for more computing power.

Intro

When it comes to AI beneficiaries and hardware for AI applications, NVIDIA has gotten all the spotlight. NVIDIA’s share price has contributed significantly to the gains of both the S&P 500 and the NASDAQ this year, and its earnings releases have become a true media spectacle. But today we will not talk about NVIDIA; instead, we will focus on AMD. AMD has long been in the large shadow of Intel but has recently surpassed Intel in both market cap and fundamental performance. At the same time, AMD has taken large steps to become one of the dominant players in the (AI) data center market, and it is time that we take a look at AMD.

For those not familiar with the semiconductor industry, here is a short introduction to some common terms that I will also use:

A CPU (central processing unit) is the “brain” of a computer and, as the name says, performs most of the processing tasks. A CPU usually works in conjunction with a GPU (graphics processing unit) and RAM. A GPU is designed to accelerate the rendering of videos and images. Because this makes it very good at handling many processing steps at once, it is heavily used these days in machine learning and AI applications.

The Company

AMD (short for Advanced Micro Devices) is an American high-tech semiconductor company based in Santa Clara, California, with more than 26,000 employees. AMD was founded in 1969 and went public as early as 1972.

AMD describes itself like this:

“We are a global semiconductor company primarily offering: server microprocessors (CPUs), graphics processing units (GPUs), accelerated processing units (APUs), data processing units (DPUs), Field Programmable Gate Arrays (FPGAs), Smart Network Interface Cards (SmartNICs), Artificial Intelligence (AI) accelerators and Adaptive System-on-Chip (SoC) products for data centers.”

AMD describes its strategy as follows:

“Our strategy is to create and deliver the world’s leading high-performance and adaptive computing products across a diverse set of customer markets including data center, client, gaming and embedded.”

Not surprisingly, AMD focuses on AI:

“We believe that AI capabilities are central to products and solutions across our markets and we have a broad technology roadmap and products targeting AI training and inference spanning cloud, edge and intelligent endpoints.”

The Industry

The semiconductor industry came to the public’s attention at the latest with the chip shortage of 2021. Before that, many people were not aware of how many chips are used in all kinds of devices, from fridges to cars. The integrated circuit was invented in 1958, and since then the industry has known only one direction: up and to the right.

Intel has been struggling in recent years, and AMD has been one of the biggest beneficiaries. For the first time since 2006, AMD might go above 40% market share in the CPU market. Notice how Apple started with its M1 chip in 2020.

GPUs have seen tremendous growth in the last years, mostly due to the rise of their usage in AI-related cases. Even more important than the past growth is the expected growth in the years to come. While I am not interested in where exactly the market will be in 2033, I believe this chart gives a good indication of what is bound to happen and why companies such as NVIDIA and AMD are so interesting.

NVIDIA’s stock price serves as a good illustration of the growth of the GPU market in the last few years.

The increase in the share price was driven by the increase in sales and income. It is unheard of for a company the size of NVIDIA to simply double its net income from a base of $30 billion.

AMD has seriously tackled the server-based GPU market with the release of the MI300 chip (more on that later). The following exchange from the Q3/2024 earnings call serves as a good intro to the rest of the article. Analyst Harsh Kumar was referring to NVIDIA.

“In the coming year, let's say, 2025, your key competitor will take most of the TAM of the AI market, the GPU market, rough count, they'll take in something like $50 billion, $60 billion, you'll get another $5 billion to $10 billion, call it. So the question is, what do you think is the major hindrance? You've got chip level compatibility. So does it boil down to the fact that you're just earlier in the game. You've been doing this just 12 months in a serious manner? Or is there still a rack level of disparity? If you could just help us think about what the hindrances are to you becoming a major player here.”

AMD CEO Lisa Su answered:

“Maybe let me say, I view them as opportunities. If you remember, Harsh, and I think you do, our EPYC ramp from Zen 1, Zen 2, Zen 3, Zen 4 we had extremely good product even back in the Rome days, but it does take time to ensure that there is trust built, there is familiarity with the product set. There are some differences, although we're both GPUs, there are some differences, obviously, in the software environment. And people want to get comfortable with the workload ramp.”

“So from a ramp standpoint, I'm actually very positive on the ramp rate. It's the fastest product ramp that I've seen overall. And my view is this is a multi-generational journey. We've always said that. We feel very good about the progress I think next year is going to be about expanding both customer set as well as workload. And as we get into the MI400 series, we think it's an exceptional product. So -- all in all, the ramp is going well, and we will continue to earn and -- earn the trust and the partnership of these large customers.”

“What I will say is customers are very, very open to AMD. And we see that everywhere we go, everyone is giving us a very fair shot at earning their business, and that's what we intend to do.”

The Business

AMD has 4 reporting segments:

Data Center: these are server CPUs, GPUs, APUs, DPUs, FPGAs, SmartNICs, AI accelerators and Adaptive SoC products

Client segment: CPUs, APUs, and chipsets for desktop, notebook, and handheld personal computers

Gaming segment: discrete GPUs, semi-custom SoC products, and development services

Embedded segment: embedded CPUs, GPUs, APUs, FPGAs, SOMs, and Adaptive SoC products

To go a bit deeper into the different segments:

Data Center

The data center segment has been growing strongly, and companies like Microsoft, Alphabet, and Amazon have spent billions in recent years to build up their data centers. Data centers have special requirements for CPUs and GPUs; you cannot simply take a CPU from a laptop and use it in a data center.

The fifth generation of AMD EPYC CPUs is already being built using 4nm and 3nm technology. Sidenote: All these processors are built by Taiwan Semiconductor and Taiwan Semi in turn buys the machines for building these chips from ASML. Check out ASML if you haven’t done so already. You will find some more information on ASML in my Best Buys article:

Best Buys December 2024

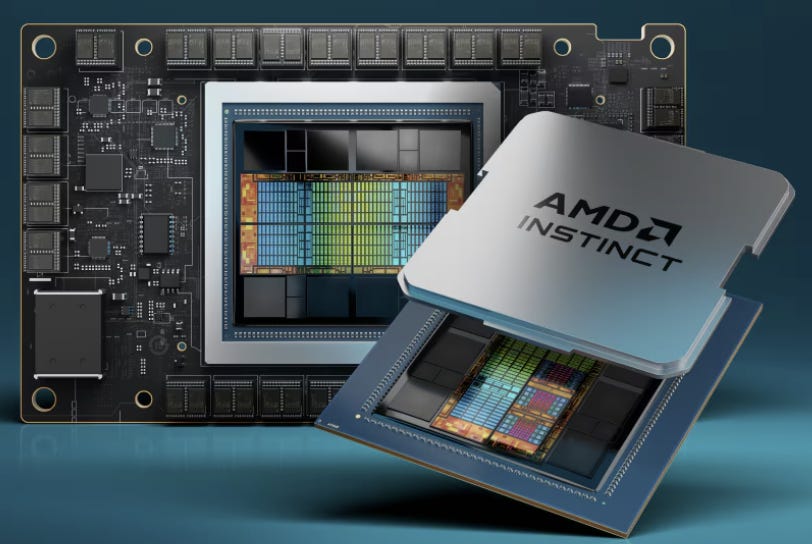

AI models need to compute many different instances in parallel, which makes GPUs far better equipped to handle the workload than CPUs. NVIDIA is the best-known provider of GPUs, and AMD focuses on GPU accelerators with its Instinct MI200 and MI300 series. The MI300 was the fastest product in AMD history to surpass $1 billion in revenue; it took just two quarters after launch to reach this milestone. In the third quarter after launch, quarterly revenue alone already exceeded $1 billion, and for the whole year AMD expects $5 billion in MI300 revenue. One big reason was Microsoft, which uses MI300 GPUs for Azure and also for ChatGPT. Meta announced that the most advanced version of its Llama AI runs exclusively on MI300X GPUs to serve all live traffic. As of 2024, most MI300 GPUs are used for AI inference, but due to rapid training adoption, AMD expects the mix of inference and training to be 50/50 within a year.

In case you wonder what exactly inference and training are: Inference is where capabilities learned during deep learning training are applied. Training must happen first; without training, inference can't happen.
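To make the difference between training and inference concrete, here is a minimal sketch in plain Python. It is a toy illustration and not how large AI models are actually built: the data, the single-weight "model", and the function names `train` and `infer` are all my own assumptions for the example.

```python
# Toy data following y = 3x; the "model" is a single weight w.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [3.0, 6.0, 9.0, 12.0]


def train(xs, ys, steps=200, lr=0.01):
    """Training: repeatedly adjust w to reduce the squared error on known data."""
    w = 0.0
    for _ in range(steps):
        # Gradient of the mean squared error with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)
        w -= lr * grad
    return w


def infer(w, x):
    """Inference: apply the already-learned weight to a new, unseen input."""
    return w * x


w = train(xs, ys)                 # expensive step, done once up front
print(round(w, 3))                # close to 3
print(round(infer(w, 10.0), 2))   # cheap step, repeated for every request
```

Training loops over the data many times, while inference is a single cheap calculation per request. With real models both steps consist of huge numbers of such multiplications, which is why they are run on GPUs.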

The downside of this fast ramp-up is that the MI300 margins are currently lower than those of the rest of the portfolio. AMD’s management believes that it can improve the margin over time as the product becomes more established.

The data center segment is getting ever more important for AMD and combined with the embedded segment contributed more than 50% of total revenue in 2023. AMD has been gaining market share at a rapid pace. Just a couple of years ago, AMD had a low single-digit market share for server CPUs and managed to increase its market share for server CPUs to 34% this year.

“Importantly, Data Center and Embedded segment annual revenue grew by $1.2 billion and accounted for more than 50% of revenue in 2023 as we gained server share, launched our next-generation Instinct AI accelerators and maintained our position as the industry's largest provider of adaptive computing solutions.”

All the large cloud players are customers of AMD, as this quote from CEO Lisa Su on the Q4/2023 earnings call illustrates:

“Amazon, Alibaba, Google, Microsoft and Oracle brought more than 55 AMD-powered AI, HPC and general-purpose cloud instances into preview or general availability in the fourth quarter. Exiting 2023, there were more than 800 EPYC CPU-based public cloud instances available.”

Besides the obvious cloud providers mentioned above, companies such as Uber and Netflix also run their cloud instances on AMD CPUs to handle their customer-facing workloads. Social media behemoth Meta has deployed more than 1.5 million (!) EPYC CPUs in its global data centers to run its social media platforms. CFO Jean Hu elaborated on this topic at the Barclays Annual Global Technology Conference in December 2024:

“when you look at the cloud native workload from Shopee, Amazon, social media, Facebook, WhatsApp to video streaming Netflix or Zoom. All those things are powered by general compute. That's the CPU. So we do see like, okay, AI is going to growing much faster, but the demand for fundamental applications when everybody increasing engagement in their platform is going to be continue to grow.”

“We can provide more performance. So we have been supporting the continued demand increase but overall, it is a very large market. We continue to see strong demand in cloud. We see modernization, we see the limitation on space and the power. So customers actually needed to upgrade. They also see their platform engagement is increasing.”

“They need more CPUs in enterprise, we also start to see the early signs of refreshing cycle. It's the same logic. It's -- you need more compute to support your applications, but you have a data center and the power limitation. So you want to get the best TCO from your suppliers. That's why we do think this CPU market and not only it's going to grow, but also we're going to continue to be able to gain share because of the performance.”

When you read the news about the insane amount of energy needed to power the most recent data centers, you know how important energy efficiency is in this market. AMD cites the efficiency of its chips as one of the reasons why customers choose AMD.

“Given our high core count and energy efficiency, we can deliver the same amount of compute with 45% fewer servers compared to the competition, cutting initial CapEx by up to half and lowering annual OpEx by more than 40%. As a result, enterprise adoption of EPYC CPUs is accelerating, highlighted by deployments with large enterprises, including American Airlines, DBS, Emirates Bank, Shell and STMicro.”

The world’s fastest supercomputer, “El Capitan”, is powered by AMD Instinct MI300A accelerators. According to Wikipedia:

“El Capitan uses a combined 11,039,616 CPU and GPU cores consisting of 43,808 AMD 4th Gen EPYC 24C "Genoa" 24 core 1.8 GHz CPUs (1,051,392 cores) and 43,808 AMD Instinct MI300A GPUs (9,988,224 cores).”

This supercomputer can do 2,000 petaflops or, in other words, 2,000,000,000,000,000,000 calculations per second.
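The core counts in the Wikipedia quote and the petaflop conversion can be checked with a few lines of arithmetic. This is just a sanity check: the variable names are my own, and the number of GPU cores per chip is not quoted but derived by dividing the quoted totals.

```python
# Figures from the El Capitan quote above.
CHIPS = 43_808                 # number of CPUs, and also of MI300A GPUs
CPU_CORES_PER_CHIP = 24        # "24C" Genoa
GPU_CORES_TOTAL = 9_988_224    # quoted GPU core count

cpu_cores_total = CHIPS * CPU_CORES_PER_CHIP
gpu_cores_per_chip = GPU_CORES_TOTAL // CHIPS   # derived, not quoted
combined_cores = cpu_cores_total + GPU_CORES_TOTAL

# 1 petaflop = 10**15 floating-point operations per second.
PETA = 10**15
flops = 2_000 * PETA

print(f"CPU cores:      {cpu_cores_total:,}")
print(f"GPU cores/chip: {gpu_cores_per_chip}")
print(f"Combined cores: {combined_cores:,}")
print(f"2,000 petaflops = {flops:,} calculations per second")
```

The CPU total and the combined total match the quoted 1,051,392 and 11,039,616 cores, and 2,000 petaflops is the same as 2 exaflops.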

An interesting side story: AMD names its processors after cities and currently they are named after Italian cities. The most recent CPUs bear the names Genoa, Bergamo, Siena, and Turin.

Client segment

These are the CPUs and GPUs you as an end consumer will most likely interact with. Your standard laptop has either an Intel or an AMD CPU and quite often a GPU or, in some cases, an APU (CPU and GPU combined on a single chip). In most cases the APU is enough, since most office workers don’t need that much computing power for MS Teams, Outlook, Excel, PowerPoint, etc. AMD has been growing its market share in the desktop market at the expense of Intel since 2016 and is getting close to overtaking Intel.

Compared to desktop CPUs, notebook CPUs have to be more energy efficient so that your laptop’s battery doesn’t run out after 2 hours of usage.

Gaming segment

Within the gaming segment, AMD also clusters its professional GPUs, which are mostly used for 3D rendering, design, and manufacturing CAD as well as media and entertainment broadcasting.

Gaming is how I got to know the names of Intel, AMD, and NVIDIA many years ago. Looking back, I could kick myself for not investing in NVIDIA. Back then there was always the discussion of whether Intel or AMD was leading in CPUs. These days GPUs have become the most important unit for rendering images faster and at better quality than ever before. In the important console market, AMD provides the RDNA graphics architecture for both the PlayStation 5 and the Xbox Series S and X. AMD also works closely with Valve to create the semi-custom APU for the Steam Deck handheld device.

According to this ranking from Tom’s Hardware, AMD is eating Intel’s lunch when it comes to the performance of gaming CPUs. The AMD Ryzen CPUs dominate the list, while Intel’s first CPU only shows up at the sixth spot.

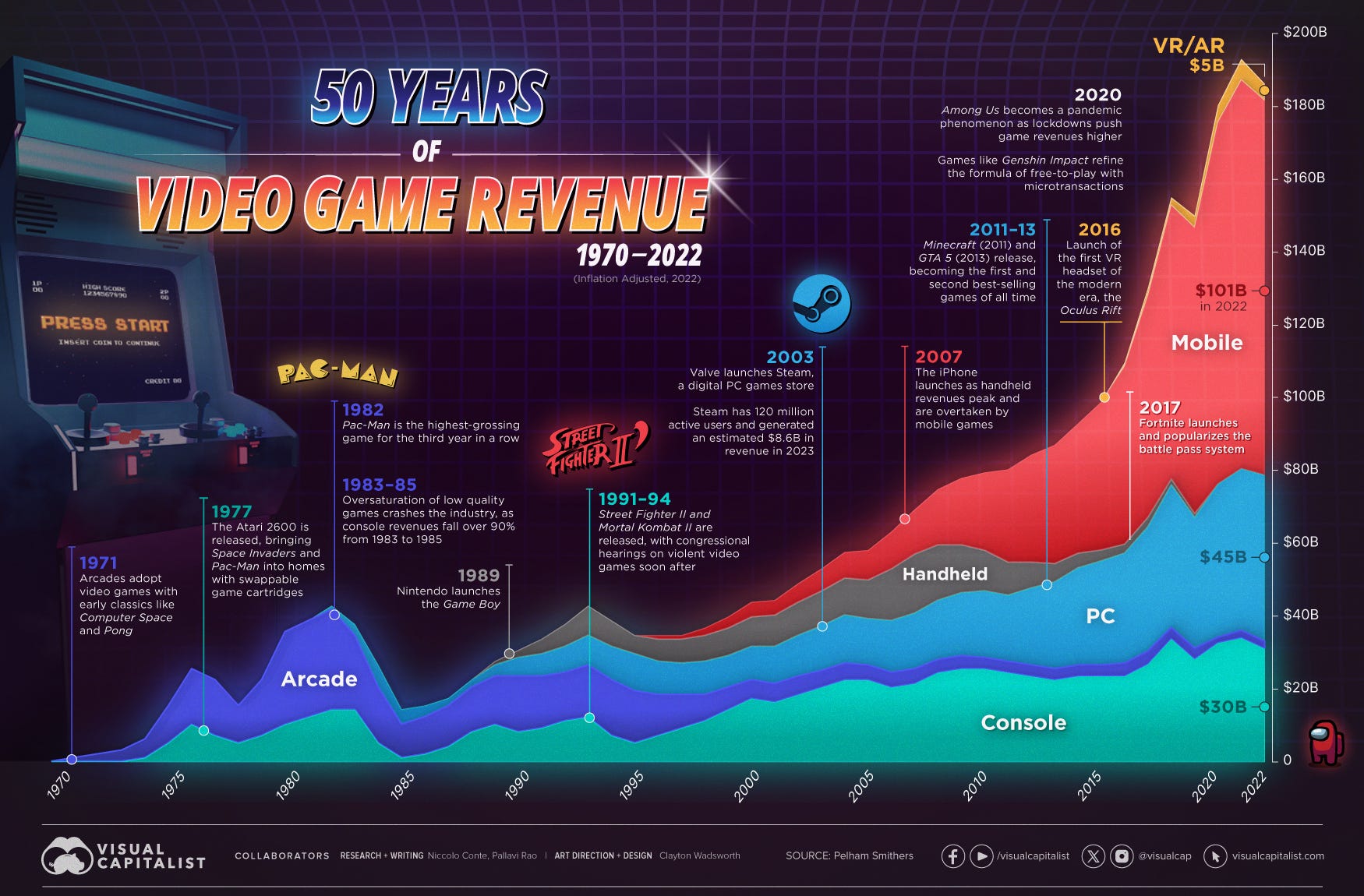

While researching for this article I came across this interesting graph: The mobile market has seen insane growth due to all the microtransactions and casual games. With the rise of computing power on mobile phones, handheld devices such as the Game Boy or PlayStation Portable disappeared. The PC has made a strong comeback, partly due to the rise of game platforms such as Steam.

Embedded segment

The embedded segment focuses on processors and systems-on-chip, which are computing devices that perform specific tasks as part of a larger system. This includes automotive, industrial, networking, aerospace, defense, and many more sectors. Depending on the use case, these systems are specialized to be very small, to have low power consumption, or to run 24x7.
